Rich Phonology: Some Background Material

Robert F. Port

Indiana University

1. Formal linguistics assumes a low-dimensional fixed phonetic segment inventory.

Chomsky,

N., &

Halle, Morris

(1954) The

strategy of phonemics. Word

10, 197-209.

This

is an early paper by Halle of some historical interest, in which some

strikingly hopeful views about phonetics are

voiced. He points out that most people assume ``we speak

in a manner quite similar

to the way in which we write'' -- that ``speech consists of

sequences of discrete sounds which are tokens of a small number of

basic types'' -- direct auditory analogs of graphical letters.

However, acoustic analysis of speech sound has shown, he says, that

speech is

``a

continuous flow of sounds, an unbroken chain of movements.'' He

hypothesizes (as he must) that the ``acoustical wave must contain clues

which enable human

beings to'' segment speech into discrete events. (I believed

that myself for many years.)

``If we could

state what these clues are, we could presumably build a machine to

perform the same operation.'' Exactly. But

unfortunately no such

machine has been

built in the succeeding half century. In 1954, it may have seemed

a good bet that phoneticians and speech engineers would soon succeed.

But in 2006, it is no longer reasonable to assume that a low-D

description of the physical form of language that is speaker

independent and segmented into Cs and Vs will still be

discovered. We simply must give up the hope for an a priori

phonetic description that is both physical and acoustic, yet also

abstract and linguistic.

Haugeland,

John (1985)

Artificial Intelligence: The Very Idea.

Haugeland's goal was to clarify the

assumptions of formalist models of cognition. These basic

assumptions are shared across computing, logic, artificial intelligence

and formal models of language and cognition. Major proponents of this

view include Chomsky,

Newell and Simon,

Fodor, Pylyshyn, Pinker, etc. Computers are

carefully engineered so that they can be interpreted in terms of

discrete

states only. Programmers (unlike computer engineers) do not need

to concern themselves with continuous time or continuous values of

voltage. Formal

linguistics always assumes an a priori set of

letter-like phonetic tokens. Thus it must presume the

physical existence of a fixed alphabet of phonetic symbols for spelling

linguistic items, as well as a discrete computational apparatus for

token manipulation. All

formal

linguistic theories build upon this assumption even though it is surely

very implausible

and the empirical claim of a universal phonetic alphabet has scarcely

even been defended by phonologists. (I am sure there must have

been

attempts, but I'm not familiar with them.) Chomsky and Halle

(1968) took

it to be intuitively obvious that such a fixed and small

inventory (under

a few hundred) of universal phonetic tokens exists. So the

problem, I claim, is that no such low-dimensional description of

phonetics is possible (see Port and Leary, 2005). Therefore

formal linguistics is impossible.

2. Segments are not compatible with spectrographic representations.

IPA,

1999. Handbook of the

International Phonetic Association. Introduction.

(pp 3-13, 27-30, 32-38).

This

thoughtful and wide-ranging chapter of the recent edition of the IPA Handbook (by Prof. John

Wells of

Klatt, Dennis (1979) Speech

perception: A model of acoustic phonetic analysis and lexical access.

Journal of Phonetics 7,

279-342.

Klatt presents two generic models for

recognizing speech in this important paper: LAFS

and Scriber.

LAFS (``lexical access from spectra'') recognizes words specified in

raw acoustic form. No segment level at all! Given all the

variations due to

context, speaker, etc., this implies there will be many alternative

acoustic

representations

for each speech chunk (whether word, phrase or what). Today, all

effective speech recognition systems work basically this way, usually

implemented as hidden Markov models on sequences of spectral

shapes (specified in a rather large `alphabet' of spectral

shapes).

But Scriber works

the

``right''

way (according to the insights of linguistics) since it converts

spectra into abstract

segmental, letter-like units, and then recognizes words and other

speech chunks using the resulting transcriptions. Back in the

1980s, my Indiana

colleague

David Pisoni always liked this paper and thought that LAFS was a model

that deserved serious consideration. But I, as a linguist-phonetician,

thought that LAFS could not

possibly be correct. I remember being almost

annoyed

that Dennis Klatt even suggested such a thing. ``Oh well,'' I thought,

``he

is just an engineer. Psychological plausibility is not so important for

engineers.'' This paper

appeared in

1979, only 3 years after I got my PhD, and now, 30 years later, I

realize that the general approach of

LAFS was the right choice all

along! And is, in fact, the only psychologically

plausible approach. The

Scriber approach, despite its

great intuitive appeal to any alphabet-literate person, is not

guaranteed to work.

Huckvale, Mark (1997) 10 things engineers

have discovered about speech recognition. NATO ASI Workshop

on Speech Pattern Processing. pp. 1-5.

This paper has many surprises for

linguists. ``This paper is about what happens when you put

Performance first'' -- relative to Competence. Huckvale has many

humbling things to say to linguists.

Ladefoged, Peter (2004) Phonetics and phonology in

the last 50 years. Paper presented at `From Sound to Sense',

a conference at MIT.

This interesting paper on the history of

phonetics presents his concerns about the

gross mismatch between segmental

linguistic descriptions of

speech and the acoustic signal. He concludes rather disturbingly:

``phonologists have the problem of deciding whether they are describing

something that actually exists, or whether they are dealing with

epiphenomena, constructs that are just the result of making a

description.'' My conclusion, of course, is the latter, and I

think Ladefoged's was as well.

Ladefoged, Peter

(2005) Features

and parameters for different purposes. Paper presented to

the Linguistic Society of America, 2005.

This paper presents Ladefoged's final views on phonetics and phonology before his passing in January, 2006. Using generally different arguments than mine, he concludes that a full description of speech requires far more degrees of freedom than Chomsky and Halle propose and that a grammar could not possibly be ``just something in some speaker's mind.'' Instead he proposes that the phonology of a language is ``a social institution,'' while ``the acts of speaking and listening all involve adjusting articulatory parameters not phonological features.'' On the other hand, he says, ``phonological features are best regarded as artifacts that linguists have devised in order to describe linguistic systems.'' Although he does not develop these ideas much beyond these statements, this appears to be essentially identical to my position: phonology is a social institution existing on a slow time scale and real-time language processing makes no use of abstract phonological descriptions at all.

3. Variation is endless when you look close.

Labov, William. (1964) Phonological

correlates of social stratification. American Anthropologist 66, 164-176.

The

first paper is Labov's fascinating MA thesis and the second reviews the

results of his dissertation on language variation on the Lower

East Side of NYC.

Labov revealed vividly the subtlety in dialect variation. Of

course, each

individual speaks a different dialect, but in fact, we each speak in

many different

styles of pronunciation detail. Our theories of language presume

there

is a linguistic state, something we could call `The English Language'

or maybe

`Lower East Side English' -- in any case, some invariant Grammar.

But Labov's data show

us (in my view, not necessarily his) that no such

entities are definable in fact. Everything is always subject to

subtle phonetic variation. All we can know about a language

is distributional patterns in a high-D auditory/phonetic space.

Traditional theories of language presume that speaker and hearer share

some common Grammar. But the fact is they never ever

share the same grammar! And every word has an unlimited

number

of

variants! So why do linguists imagine they could describe

some dialect

or other in discrete low-D terms?

Bybee, Joan (2002) Word frequency and context of use in the lexical diffusion of phonetically conditioned sound change. Language Variation and Change 14, 261-290.

Bybee and other variationists have explored the ways that the

distribution of

variants for any word is influenced by such properties as the frequency

of occurrence of each

individual word -- as well as many other factors.

If every word has a bazillion variants, what can it mean to say ``I

know Word X''? Apparently it must mean either (A) ``I know the

list of (essentially) all variants'' or, perhaps, (B) ``I am able to

evaluate utterances for their similarity to the

whole distribution of variants of Word X.'' This does not

resemble what generative phonologists are doing -- but they too think

they are

explicating what it means to know Word X.
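Option (B) can be made concrete with a small sketch. It assumes, purely for illustration, that each stored variant is a point in a phonetic space and that similarity falls off exponentially with distance; the two-dimensional values, the word labels and the `sensitivity` parameter are all made up and are not taken from Bybee or from any particular model.

```python
import math

def distance(a, b):
    """Euclidean distance between two points in the (hypothetical) phonetic space."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def similarity_to_word(utterance, variants, sensitivity=1.0):
    """Summed similarity of one utterance to every stored variant of a word."""
    return sum(math.exp(-sensitivity * distance(utterance, v)) for v in variants)

# Made-up clouds of remembered variants for two words.
lexicon = {
    "word_x": [(1.0, 2.0), (1.2, 1.9), (0.9, 2.2)],
    "word_y": [(4.0, 0.5), (3.8, 0.7)],
}

utterance = (1.1, 2.0)  # a new token, identical to none of the stored variants
scores = {word: similarity_to_word(utterance, variants)
          for word, variants in lexicon.items()}
best_match = max(scores, key=scores.get)
print(best_match)
```

On this reading, knowing Word X is nothing more than being able to compute such a score against the stored cloud; no single canonical transcription of the word is consulted.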

4. Long-term memory, including memory for words, is far richer than we thought.

Palmeri,

T. J., Goldinger, S. D., & Pisoni, D. B. (1993). Episodic

encoding of voice attributes

and recognition memory for spoken words. Journal of Experimental Psychology: Learning, Memory, and Cognition 19, 309-328.

This paper presents powerful evidence

from a `recognition memory' task

that our memory for speech is not abstracted away from speakers'

voices, as linguists and phoneticians tend to think (as claimed

explicitly by Morris Halle, 1954 and 1985). We actually store

lots of

phonetic

detail including speaker idiosyncrasies. This set of results

troubled

me for over a decade until I finally decided that I should try

to consider that the data might actually mean what they seem to mean --

that an

abstract phonological transcription of speech does not

do the primary job of linguistic memory and does not limit what is

stored in memory.

This mental exercise is what led to the revolutionary

theory being presented here. One implication of these

results is

that we store far more detailed information about speech than we

linguists

ever imagined. It also implies we store speech in a very

concrete,

auditory fashion -- not using abstract speaker-independent and

context-free descriptors. Thus, a memory including specific

examples (as well as prototypes and abstract

specifications) is implicated. It turns out that lots of data

from

many areas of psychology have been implicating such representations for

a long time.

Hintzman,

This, the granddaddy of mathematical memory models, is called

Minerva2,

and is easier to

understand than most since the math is simple linear

algebra. You

will see how a rich and detailed memory of concrete exemplars with no

abstractions can perform better on training tokens yet still allow

abstractions (and prototypes) to be computed on the fly whenever

needed. Many more recent models (e.g., by Nosofsky and Shiffrin)

use

different mathematics but retain a key role for the training exemplars

to predict results.
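The following is a minimal sketch of a Minerva2-style memory. It assumes the usual textbook formulation: features are coded -1, 0 or +1, similarity is a normalized dot product, and activation is similarity cubed. The pattern length, the `distort` helper, the two prototypes and every other name are illustrative assumptions, not taken from Hintzman's paper.

```python
import random

def similarity(probe, trace):
    """Dot product divided by the number of features nonzero in either vector."""
    relevant = sum(1 for p, t in zip(probe, trace) if p != 0 or t != 0)
    if relevant == 0:
        return 0.0
    return sum(p * t for p, t in zip(probe, trace)) / relevant

def echo(probe, memory):
    """Return (intensity, content) of the echo a probe evokes from all stored traces."""
    # Cubing preserves the sign and lets close matches dominate the echo.
    activations = [similarity(probe, trace) ** 3 for trace in memory]
    intensity = sum(activations)
    content = [sum(a * trace[j] for a, trace in zip(activations, memory))
               for j in range(len(probe))]
    return intensity, content

def distort(pattern, n_flips, rng):
    """Copy a pattern and flip the sign of n_flips randomly chosen features."""
    noisy = list(pattern)
    for j in rng.sample(range(len(noisy)), n_flips):
        noisy[j] = -noisy[j]
    return noisy

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

# Two hypothetical categories; only distorted exemplars are ever stored.
prototype_a = [1] * 10 + [-1] * 10
prototype_b = [1, -1] * 10
rng = random.Random(0)
memory = ([distort(prototype_a, 3, rng) for _ in range(10)] +
          [distort(prototype_b, 3, rng) for _ in range(10)])

intensity, content = echo(prototype_a, memory)
print("echo intensity:", round(intensity, 3))
print("echo closer to A than to B:",
      dot(content, prototype_a) > dot(content, prototype_b))
```

The memory here holds only distorted exemplars, never prototype A itself, yet probing with the prototype returns an echo whose content lines up with A rather than B. In that sense the abstraction is computed at retrieval time rather than stored.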

O'Reilly, Randall and Kenneth Norman (2002) Hippocampal

and

neocortical contributions to memory: Advances in the complementary

learning systems

framework. Trends in Cognitive Sciences 6,

505-510.

This proposes a neural model for `episodic memory,' that is, memory of randomly co-occurring events (e.g., the words you hear someone say to you, or the specific events that happened on the way to work this morning), a memory that can store such information after a single trial.

Gluck, M., M. Meeter and C.E. Myers (2003) Computational models of the hippocampal region: linking incremental learning and episodic memory. Trends in Cognitive Sciences 7, 269-276.

The point of these papers is that there are neurologically plausible

mechanisms that can learn details of specific examples of complex,

multimodal

episodes on a single trial without repetitive

training. These papers suggest that a surprising amount of rote

material

could be available in the memory of speaker-hearers. Remembering

all examples forever is not plausible, but we apparently remember much

richer detail -- including language detail -- than most of us imagined.

Any implications for the study of language? Of course.

Pisoni,

D. B. (1997). Some

thoughts on `normalization' in speech perception. In K. Johnson

& J.

Mullennix (Eds.), Talker variability in speech processing (pp.

9-32).

Lachs,

Lorin,

Kipp McMichael and D. B. Pisoni (2000) Speech perception and

implicit

memory: Evidence for detailed episodic encoding of phonetic

events. In J. Bowers and C. Marsolek (eds.)

Rethinking Implicit Memory

(Oxford:

Oxford Univ Press).

Also in Research on Spoken Language

Processing, 24, 149-167 (Psychology Dept, Indiana University).

Pisoni and colleagues draw out what it means for a theory of language that people use an episode-like memory rather than an `abstractionist' model of speech (like phones or phonemes). Lachs et al. point out that the traditional

perspective on speech perception assumes that linguistic information

and contextual information are stored independently. Instead they

argue their data support a ``single complex memory system that retains

highly detailed, instance-specific information in a perceptual record

containing all of our experiences -- speech and

otherwise.''

They are calling for a new kind of linguistics that lets go of the

assumption of discrete abstract units. Such a theory of language

is what I am now trying to initiate with this website.

Pierrehumbert,

Janet (2001) Exemplar

dynamics: Word frequency, lenition and contrast. In J. Bybee

and P.

Hopper (eds) Frequency Effects and the Emergence of Linguistic

Structure. (Benjamins,

She explains exemplar models (based on Hintzman) in her own

way and

addresses the question of how an exemplar model of memory might make

predictions about speech production. She proposes that the

perceived

(and stored) distribution can be a direct model of production

probabilities. Her algorithm for choosing what to produce is simply to treat the distribution of perceived tokens as a probability distribution over production targets and to sample from it at random. This is one

of the first attempts

by a

linguist to consider the implications of detailed exemplar memory

representations of speech.
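The production rule described above can be sketched in a few lines. This is only an illustration of "sample a target at random from the stored tokens"; the class name, the single made-up phonetic dimension and the token values are assumptions, and the sketch leaves out anything further that Pierrehumbert's paper adds to this basic idea.

```python
import random

class ExemplarCloud:
    """Stored tokens of one word, each reduced to one hypothetical phonetic value."""

    def __init__(self):
        self.tokens = []

    def perceive(self, value):
        # Every token heard is simply added to memory.
        self.tokens.append(value)

    def produce(self, rng):
        # Production target = one stored token drawn uniformly at random, so
        # values heard more often are proportionally more likely to be produced.
        return rng.choice(self.tokens)

rng = random.Random(1)
cloud = ExemplarCloud()
for value in [48.0, 50.0, 50.0, 50.0, 55.0]:  # made-up values on some phonetic scale
    cloud.perceive(value)

productions = [cloud.produce(rng) for _ in range(1000)]
print(sorted(set(productions)))
```

Because every production is drawn from what was perceived, the production distribution tracks the perceived distribution without any separate rule or abstract target.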

Johnson,

Keith (2005, mspt) Decisions

and mechanisms in

exemplar-based phonology. UC Berkeley Phonology

Lab Annual Report 2005. Johnson considers some

issues that will trouble linguists about exemplar models of memory for

language.

5. What modality are phonetic targets: articulatory, auditory, somatosensory?

Liberman,

Alvin M., Franklin S. Cooper, Donald P. Shankweiler and Michael

Studdert-Kennedy

(1967) Perception of the speech code. Psychological Review 74,

431-461. Reproduced in J. Miller, R. Kent and B. Atal

(eds.) (1991) Papers in Speech

Communication: Speech Perception. (Acoustical Society of

America, Woodbury, New York).

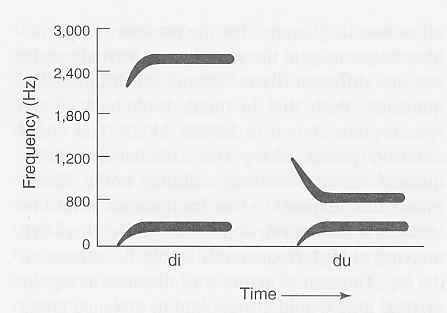

A monumental review paper summarizing the

Haskins Labs

view of speech perception arguing for the `motor theory of speech

perception'. This group thought that words must be

specified in

memory in low-dimensional, segmental and articulatory terms, so

the di/du

problem (illustrated at right) was major. But if auditory

memory is

very large, then speakers can just store context-sensitive formant

trajectories and burst spectrum shapes. The fact that di and du

begin with what we call ``the same sound'' may be something that is

noticed by most speakers

only after learning to write

with an alphabet. At least

this issue about what speech sounds ``sound the same'' needs more

research.

Lindblom, Björn (2004)

The organization of speech movements: Specification of units and modes

of

control. Paper presented at conference `From Sound to Sense'

at

MIT. A survey of speech production theories.

Diehl,

Randy, Andrew Lotto and Lori Holt (2004) Speech

perception. Annual Review of Psychology 55,

149-179. Presents the case that, no

matter what, the

basic representations of word targets must be auditory.

Galantucci,

Fowler and Turvey (2006) The

motor theory

of speech perception reviewed. Psychonomic

Bulletin and Review 13,

361-377.

Guenther, Frank (1995)

Speech sound acquisition,

coarticulation and rate effects in a neural network model of speech

production. Psych Review 102, 594-621.

Guenther,

Frank and Joseph Perkell (2004) A

neural model of speech

production and supporting experiments. Paper presented at `From

Sound to

Sense', conference at MIT, June 2004. Available at

http://www.rle.mit.edu/soundtosense/.

Speech sounds must have both convenient articulatory properties and practical auditory properties. Diehl, Lotto and Holt argue for a speech perception process using only general auditory capabilities (rather than specialized perceptual skills that are used for speech and nothing else). Clearly, my position is closer to theirs than to the traditional Haskins Labs view. Guenther's feedforward production system just produces gestures -- normally with no feedback. But the primary realtime guidance for them is based on somatosensory (i.e., orosensory) feedback (from muscles, joints and skin surfaces). So the best answer to a question about realtime targets is: the targets are somatosensory, and neither gestural nor auditory. So I don't agree with Galantucci, Fowler and Turvey that ``perceiving speech is perceiving gestures'' (their claim number 2); it is just perceiving speech. But their claim 3 that ``the motor system is recruited for perceiving speech'' seems to be true and provides a coherent, reliable coding method for storing speech patterns. (See Stephen M. Wilson, 2004, Listening to speech activates motor areas involved in speech production. Nature Neurosci.)

The issue regarding short-term memory (STM) is how words

are stored for

short periods of

time -- under 20 sec or so. Baddeley uses the term `phonological

loop'

for the system that

stores a small number of words for a few seconds. Linguists might

assume that he means the same thing they mean when using the term

``phonological''. But his data show that this

code is either an auditory code or an articulatory one -- but it is

definitely not the kind of

abstract, segmented, speaker-invariant code that linguists think of

when they

use this term. So the code is either motor or auditory (or

both). Note that Baddeley claims the phonological loop has two

parts

-- a

store or buffer (which exhibits confusion between words that sound

similar even

if articulated quite differently) and a (subvocal) motor representation

used

for rehearsal (which accounts for why Ss can remember fewer longer

words than

shorter ones, i.e., the `word-length effect').

Baddeley, Alan D. (2002) Is working memory still working? European Psychologist 7, 85-97.

In this paper Baddeley extends his earlier model in a useful way by adding an `episodic buffer' -- a store for information from many modalities that is retained for a while.

7. Why are letters so convincing despite all the evidence? Because of our education.

Olson, David R.

(1993) How

writing represents

speech. Language and Communication

13, 1-17. This article is a revised

version of Chapter 4 in his outstanding and highly recommended book: The World on Paper: The Conceptual and

Cognitive Implications of Writing and Reading. (Cambridge:

1994). He points out that various human notions of what

language is are invariably based on whatever we represent with our

writing system. So the idea that our alphabetical writing is a

`cipher' (one-to-one replacement) from phonemes into graphemes has

reversed the true order! We linguists tend to believe our

language is

made from phonemes

primarily because we use

letters to represent speech.

Faber,

Faber

anticipated the argument I am making here over a decade

ago. Her

paper has been largely ignored, as far as I can tell. She

argued that

languages seem to be composed from letter-like segments primarily

because we have all been trained in alphabetic literacy.

Linguistics.

(Oxford Univ Press,

These papers show quite clearly that

a segmental description of speech (in terms, that is, of consonants and

vowels)

may be intuitively persuasive for us. But it is

not

universal -- indeed most humans, through our history, have not had such

intuitions.

It appears that some amount of alphabet training is necessary for

people to hear speech in terms of Cs and Vs.

Ziegler,

Johannes and Usha Goswami (2005)

Reading

acquisition, developmental dyslexia and skilled reading across

languages: A

psycholinguistic grain size theory. Psych Bulletin 131, 3-39.

This

review paper strongly endorses the view that the alphabetical

description of

language

(using phonemes or letters) is a result of learning how to do

alphabetical

reading and writing. It also demonstrates that the inconsistencies in

the

spelling of English greatly complicate the problem of learning to read.

Children taught to read Finnish or Italian can read new words as well

after one

year of school as English-speaking children can do after 3 years of

school. Of course, the latter's practical reading skill may still be better than that of a child learning to read Chinese after the same amount of training.

Studies of reading reveal clearly how an understanding

of language in terms of phonemes is almost entirely

a consequence of learning how to read with an alphabet. This

direction of causality agrees

with my general story that segmental descriptions of speech are shaped

and reinforced by extensive practice at reading and writing. But

this

result is in serious

conflict with the conventional views of linguists and psychologists

about

language. The traditional view insists that learning a language

and

remembering vocabulary are skills that depend upon letter-like

phonemes. Some set of letter-like units is said to be available in

advance to all members of the

species. On this view, all

speakers, whether literate or not, should store language using a

cognitive

alphabet. But the data do not support any such predictions.

Of

course, there is the question

of how the alphabet could have been

invented if phonemes lack this intuitive basis. But it should be

kept in mind that

the

early Greek alphabet was the result of roughly 3000 years of efforts in the Middle East to engineer

a culturally transmittable writing system that was easy to

teach and use. And the alphabet

was only

invented once -- as has been pointed out many times. I would

claim

that those Greek `writing engineers' (standing on the shoulders of

earlier Middle Eastern writing engineers) actually invented the phoneme

concept itself. The phoneme is the ideal, the conceptual

archetype, for which all alphabetical orthographies are just imperfect

implementations.

Robert F. Port, April 9, 2008