Evolution can be seen as a way of solving problems through a process of "survival of the fittest". Biological evolution is just the best-known example of evolution. In biological evolution, traits that lead to survival and the possibility of reproduction are passed on from generation to generation and tend to dominate traits that are counter-productive. Cultural evolution is another example. The field of evolutionary computation seeks both to model natural evolution and to use ideas from natural evolution to solve practical problems.

For evolution, either natural or artificial, to work, we need at least the following.

How it works

Genetic algorithms (GAs) are a search technique, a way of solving problems using a form of reinforcement learning based on evolution. Here is another site where you can learn about genetic algorithms. GAs are used for

These are problems in which the candidate solutions consist of the settings of multiple parameters. Consider an old-style television with dials labeled with things like "vertical hold" and "contrast". Some combination of settings for these dials results in a good picture, but the viewer is clueless about what combination is the right one. The parameters in this case are the dials, and the search problem is to find the right combination of settings. To apply a GA to a problem like this, we treat each candidate solution as a genome in a population and evaluate each in terms of its fitness, in this case, in terms of the quality of the TV picture that results from the settings.

GAs may be used to study biological evolution itself. An artificial life simulation is set up in which the behavior of creatures depends (at least in part) on their genomes and in which creatures pass on their traits to their offspring. The researcher observes how the fitness of the population varies over different generations and what sorts of solutions the creatures evolve.

Neural networks learn by adjusting their weights on their connections, but where do the connections come from in the first place? That is, what wires up the neural architecture in which neural network learning takes place? In real life, much of this is apparently the job of evolution: some neural structure is innate. One area of research combines GAs with neural networks: the neural network architecture (numbers of units, patterns of connectivity joining them, learning algorithms, etc.) is coded for in the genome, and neural network learning adjusts the weights on the connections in the network.

In designing a GA to solve a problem, we first need a way of representing the relevant traits in the genome, the genetic encoding. The genome is usually a binary string. Features which are not binary may be encoded in sets of positions. For example, if we want to represent the settings of five TV dials and each dial has eight possible settings, we could use genomes consisting of 15 bits (0s or 1s). There would be three bits for each dial because it takes to three bits to represent eight different numbers. The genome is a code. There must be a way for it to be translated into something that is relevant for fitness. The most direct approach is to have a fitness function that takes the genome itself and returns a fitness value. This is what happens in the simple example below. A less direct approach, more like real life, is to have the genome code for the behavior of the creature that contains it, that is, to distinguish between the genome and the phenome. Fitness is then evaluated on the basis of the behavior rather than the genome itself. This is what we will do in the SMARTS GA.

There must be a way for individuals to be evaluated, a fitness function. In most GAs, the fitness function assigns a numerical score to each genome (or to the behavior of the phenome). Of course the fitness function is not known or understood by the designer of the GA; if it were, there would be nothing to search for since the solution would obvious. Alternately the function may be built into the environment, allowing some creatures (at least in part because of their traits) to survive long enough to reproduce and preventing others (again because of their traits) from reproducing. This is how the fitness function will be implemented in SMARTS.

Individuals must somehow be selected for reproduction on the basis of their fitness. There are two sorts of selection procedures. Selection is usually indirect: the individuals' chance of reproducing is a function of their fitness. For example, the parents may be selected probabilistically on the basis of their proportion of the total fitness of the population. In models that are more like biological evolution, selection may be direct: more successful individuals are better able to "find" mates than less successful individuals.

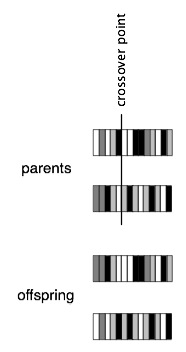

Reproduction in GAs usually makes use of an operation called crossover. For each pair of parents, a crossover point is selected randomly within their genomes. One of the offspring gets the first part of the genome (everything before the crossover point) from parent A and the second part of the genome from parent B. The other offspring gets the first part of the genome from parent B and the second part from parent A.

While crossover allows for the combination of the genomes of successful individuals, only features that appear in one of the parents' genomes can appear in the offsprings' genomes. Mutation permits a GA to go beyond the features in the current population. With a small probability, the mutation rate (say, 0.001), each of the bits in a new genome created during reproduction is flipped (1 changed to 0 or 0 changed to 1). Mutation can create a feature that would not exist otherwise and prevent the population from converging too soon. It performs the same function as exploration in reinforcement learning.

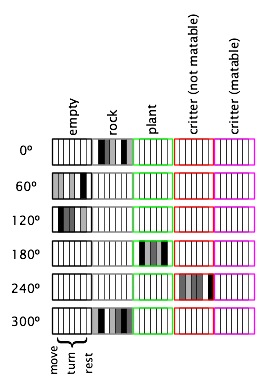

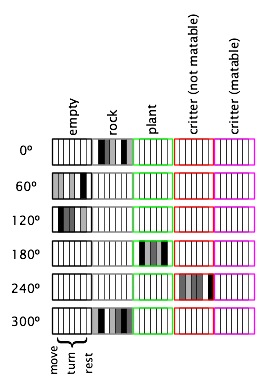

The task of the critters in the evolution version of SMARTS is essentially the same as that in the learning version: to figure out how to behave in different states. An agent's states are what its sensors detect of the world around it. In the SMARTS program, critters have only simple touch sensors which can tell the texture of one or more of the cells that immediately surround the critter. In the evolution version of the program, there are five possibilities for the contents of a cell: either it is empty or it contains a plant, a rock, or another critter, and two kinds of critters can be distinguished, those that are "in heat" (possible mates) and those that are not. All five of these "textures" are distinguishable by the critter's sensors, and these represent the possible states that are the basis for the critters' behavior.

Critters have seven possible actions: they can attempt to move forward one cell, turn in one of the five possible directions, or do nothing (rest). What happens when a move is attempted depends on what's in the cell in front of the critter. If the cell is empty, the critter goes to that cell. If the cell contains a plant, the critter moves to the cell and eats the plant in the process. If the cell contains a rock, the critter "bumps" into the object and stays where it is. If the cell contains another critter, what happens depends on the "matability" of the two critters. If both are matable, the two critters mate and give birth to two offspring. See below for details of matability and mating. If one or the other or both of the critters are not matable, the critter that attempted to move just "bumps" into the other critter as if it were a rock and stays where it is.

We need a genetic encoding scheme to relate sensory states to actions so that agents that make the "right" decisions (for example, that choose to move onto plants or to matable critters) can pass on their fit genes to the next generation. Given six touch sensors (for each of the cells surrounding the critters), each of which can detect five different textures, there are 56 = 15,625 different states that the critters can be in. However, as with the neural network approach to reinforcement learning, we can use fewer resources by representing states in a distributed by allotting a section of the genome to each combination of a single sensor and texture. For example, one section of the genome represents the "empty" texture for the sensor that feels the cell directly in front of the critter. We need 5 × 6 = 30 of these sections. For each position-texture section, we need to represent the relative goodness of each of the seven actions; each section is thus divided into seven subsections. We need 5 × 6 × 7 = 210 of these position-texture-action subsections. Each of these subsections must be able to hold a quantity that represents the relative goodness of that action when the critter senses that texture in that position. Since the individuals genes in the genome are normally bits (0 or 1), they only allow us to distinguish two different values. For finer-grained distinctions, we need multiple genes for each value. With two genes, we can distinguish four different values, for example. Given this level of granularity, which is what is used in SMARTS, each genome consists of 5 × 6 × 7 × 2 = 420 binary values.

The figure below shows the relevant portions of a possible genome for a critter that is surrounded by a rock, two empty cells, a plant, another critter, and another rock (in clockwise order starting in the position in the direction of the critter's heading). The genome is shown broken into six sections for convenience. Each small rectangle stands for two binary values, with the brightness signifying the value that is represented by the two numbers (white = 0, black = 3). For example, the first rectangle in the rock section of the 0° is white, indicating that the critter currently "believes" that moving when there is a rock directly in front of it is not beneficial. The black rectangle directly to the right of this one represents the high valuation of turning clockwise 60° in this situation.

Each critter starts with an initial strength of 75. When the critter takes an action, it receives an reinforcement, which is added to its current strength (except that the strength can never exceed 100). There are five possible reinforcements that are built into the world in the evolution version of SMARTS; you can change any of these by selecting "Change params" in the Control window.

Critters can only mate when they are "matable". Matability depends on two properties of a critter: (1) whether the number of time steps since its last mating or since its birth is greater than a constant parameter called the "mating refractory" and (2) whether its strength is at least as great as the value of mate punishment. The first criterion prevents critters from mating many times with the same other critter (leading to less diversity in the population) and from mating before they have had a chance to be tested in the world. The second criterion prevents unfit critters from mating (and passing on unfit genes to the next generation). The mating refractory parameter starts at 5, but you can change it by selecting "Change params".

When two critters mate, they produce two offspring. Each of these receives a portion of the genome of each of its parents, through the crossover process described above. Crossover is illustrated in the figure below for individuals with much smaller genomes than those in SMARTS.

Following crossover, mutation is applied to the two new genomes. With a small probability, the "mutation probability", each gene in the genome is flipped from 0 to 1 or 1 to 0. As with the other parameters, you can change the mutation probability by selecting "Change params" in the Control window.

To simplify the evolutionary process, it is usual to keep the population size constant from one generation to the next. In the SMARTS program, this is achieved through a mechanism similar to reincarnation. Whenever two new critters are born, the two weakest critters in the population are identified and the new critters effectively take over their bodies, starting their lives wherever the weak critters were but with their new genomes.

On each time step, each critter calls its touch sensors, receiving information about the textures in the six cells around it. It then examines its genome, considering only the portions of the genome relevant for its sensory state. For example, if the critter detects a rock texture directly in front of it, from within the portion of the genome responsible for what's in front of it, it only needs to examine the fifth of the portion that represents knowledge about rocks in this position. Knowledge about other textures in this position is irrelevant. For each of the seven actions, it adds up the values stored in the genome for those actions, given the textures that appear in the six positions around it and selects the action with the highest total value. (If there is a tie for the highest, it randomly picks one.) The critter then takes this action, receiving the appropriate reinforcement from the world.

You can observe evolution taking place in several ways. First, you can watch the critters in the "World" window and see whether they are behaving as they should (for example, by eating plants and moving when they have emptiness around them). Second, you can select "Show strengths frame" in the "Options" menu to bring up a window which displays the strengths of all of the critters in the world as horizontal bars. Third, you can select "Show genomes frame" in the "Options" menu to bring up a window which displays the genomes of all of the critters in the world as horizontal bands of rectangles, one for each position-texture-action value. (If you are running evolution for a long time, you should not leave this window open since it slows the program down considerably.)

You can also study what evolution achieves by turning it on and off. Unselecting "Decide using genes" in the "Behavior" menu causes the critters to decide on actions randomly rather than using the knowledge in their genes. You may be surprised to discover that unless the critters have evolved quite a bit, performance may be superior with the option of behaving randomly. Unselecting "Evolve?" in the "Behavior" causes evolution itself to be turned off; that is, the genomes of new offspring are randomly generated rather than created from their parents' genomes.

You will discover that with many of the possible combinations of parameter settings, the genetic algorithm fails to result in a fit population; within a few generations (even one), all of the critters may have died. In these cases, the world is simply too hard for evolution to find the right solution before it's too late. An interesting alternative is to start with an easier world, for example, one in which the punishment for bumping into things is not so severe and the reward for eating plants is greater, and then have the world become more difficult gradually. You can do this in the program by selecting "Change params" and adjusting the parameters several times during evolution. In this way, you may find that the critters are ultimately able to survive in a world that is even more difficult than one they all die out in at the beginning of evolution.